Recent content policy announcements by Meta pose a grave threat to vulnerable communities globally and drastically increase the risk that the company will yet again contribute to mass violence and gross human rights abuses – just like it did in Myanmar in 2017. The company’s significant contribution to the atrocities suffered by the Rohingya people is the subject of a new whistleblower complaint that has just been filed with the Securities and Exchange Commission (SEC).

On January 7, founder and CEO Mark Zuckerberg announced a raft of changes to Meta’s content policies, seemingly aimed at currying favor with the new Trump administration. These include the lifting of prohibitions on previously banned speech, such as the denigration and harassment of racialized minorities. Zuckerberg also announced a drastic shift in content moderation practices, with automated content moderation being significantly rolled back. While these changes have initially been implemented in the US, Meta has signaled that they may be rolled out internationally. This shift marks a clear retreat from the company’s previously stated commitments to responsible content governance.

As has been well-documented by Amnesty International and others, Meta’s algorithms prioritize and amplify some of the most harmful content, including advocacy of hatred, misinformation, and content inciting racial violence – all in the name of maximizing ‘user engagement,’ and by extension, profit. Research has shown that these algorithms consistently elevate content that generates strong emotional reactions, often at the cost of human rights and safety. With the removal of existing content safeguards, this situation looks set to go from bad to worse.

As one former Meta employee recently told Platformer, “I really think this is a precursor for genocide […] We’ve seen it happen. Real people’s lives are actually going to be endangered.” This statement echoes the warnings from various human rights experts who have raised concerns about Meta’s role in fuelling mass violence in fragile and conflict-affected societies.

We have seen the horrific consequences of Meta’s recklessness before. In 2017, Myanmar security forces undertook a brutal campaign of ethnic cleansing against Rohingya Muslims. A UN Independent Fact-Finding Mission concluded in 2018 that Myanmar had committed genocide. In the years leading up to these attacks, Facebook had become an echo chamber of virulent anti-Rohingya hatred. The mass dissemination of dehumanizing anti-Rohingya content poured fuel on the fire of long-standing discrimination and helped to create an enabling environment for mass violence. In the absence of appropriate safeguards, Facebook’s toxic algorithms intensified a storm of hatred against the Rohingya, which contributed to these atrocities. According to a report by the United Nations, Facebook was instrumental in the radicalization of local populations and the incitement of violence against the Rohingya.

Rather than learning from its reckless contributions to mass violence in countries including Myanmar and Ethiopia, Meta is instead stripping away important protections that were aimed at preventing any recurrence of such harms.

In enacting these changes, Meta has effectively declared an open season for hate and harassment targeting its most vulnerable and at-risk users, including trans people, migrants, and refugees.

Meta claims to be enacting these changes to advance freedom of expression. While it is true that Meta has wrongfully restricted legitimate content in many cases, this drastic abandonment of existing safeguards is not the answer. The company must take a balanced approach that allows for free expression while safeguarding vulnerable populations.

All companies, including Meta, have clear responsibilities to respect all human rights in line with international human rights standards. Billionaire CEOs cannot simply pick and choose which rights to respect while flagrantly disregarding others and recklessly endangering the rights of millions.

Pat de Brún is Head of Big Tech Accountability at Amnesty International

An investigation by Amnesty International in 2022 found that Meta had “substantially contributed” to the atrocities perpetrated against the Rohingya, and that the company bears a responsibility to provide an effective remedy to the community. However, Meta has made it clear it will take no such action.

Rohingya communities – most of whom were forced from their homes eight years ago and still reside in sprawling refugee camps in neighboring Bangladesh – have also asked Meta to provide remediation by funding a $1 million education project in the refugee camps. The sum represents just 0.0007% of Meta’s 2023 revenue of $134 billion. Despite this, Meta rejected the request. This refusal further demonstrates the company’s lack of accountability and its prioritization of profit over human dignity.

That is why we – Rohingya atrocity survivor Maung Sawyeddollah, with the support of Amnesty International, the Open Society Justice Initiative, and Victim Advocates International – on January 23, 2025, filed a whistleblower complaint with the SEC. The complaint outlines Meta’s failure to heed multiple civil society warnings from 2013 to 2017 regarding Facebook’s role in fueling violence against the Rohingya. We are asking the agency to investigate Meta for alleged violations of securities laws stemming from the company’s misrepresentations to shareholders in relation to its contribution to the atrocities suffered by the Rohingya in 2017.

Between 2015 and 2017, Meta executives told shareholders that Facebook’s algorithms did not result in polarization, despite warnings that its platform was actively proliferating anti-Rohingya content in Myanmar. At the same time, Meta did not fully disclose to shareholders the risks the company’s operations in Myanmar entailed. Instead, in 2015 and 2016, Meta objected to shareholder proposals to conduct a human rights impact assessment and to set up an internal committee to oversee the company’s policies and practices on international public policy issues, including human rights.

With Zuckerberg and other tech CEOs lining up (literally, in the case of the recent inauguration) behind the new administration’s wide-ranging attacks on human rights, Meta shareholders need to step up and hold the company’s leadership to account to prevent Meta from yet again becoming a conduit for mass violence, or even genocide.

Similarly, legislators in the US must ensure that the SEC retains its neutrality, properly investigates legitimate complaints – such as the one we recently filed – and ensures that those who abuse human rights face justice.

Globally, governments and regional bodies such as the EU must redouble their efforts to hold Meta and other Big Tech companies to account for their human rights impacts. As we have seen before, countless human lives could be at risk if companies like Meta are left to their own devices.

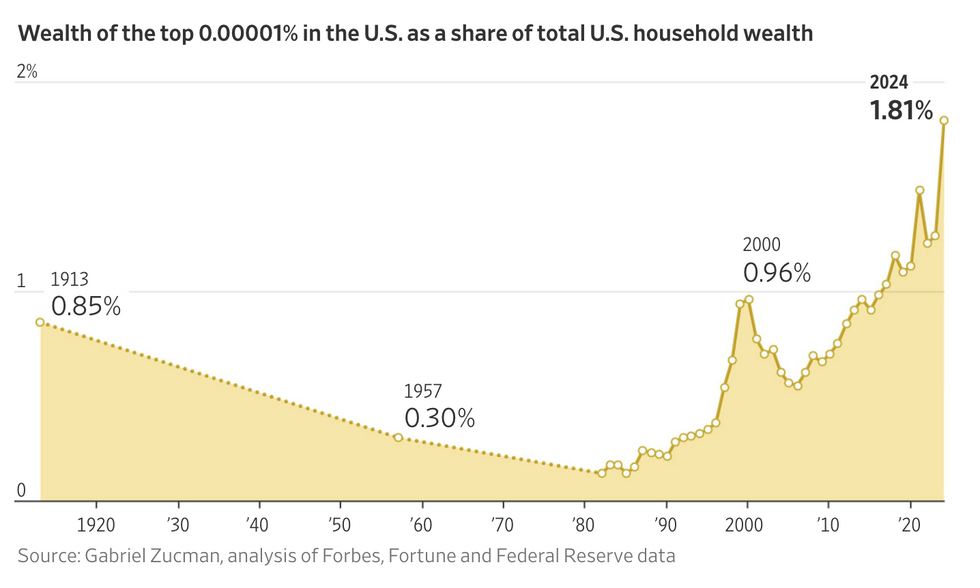

(Source: Gabriel Zucman via The Wall Street Journal)
