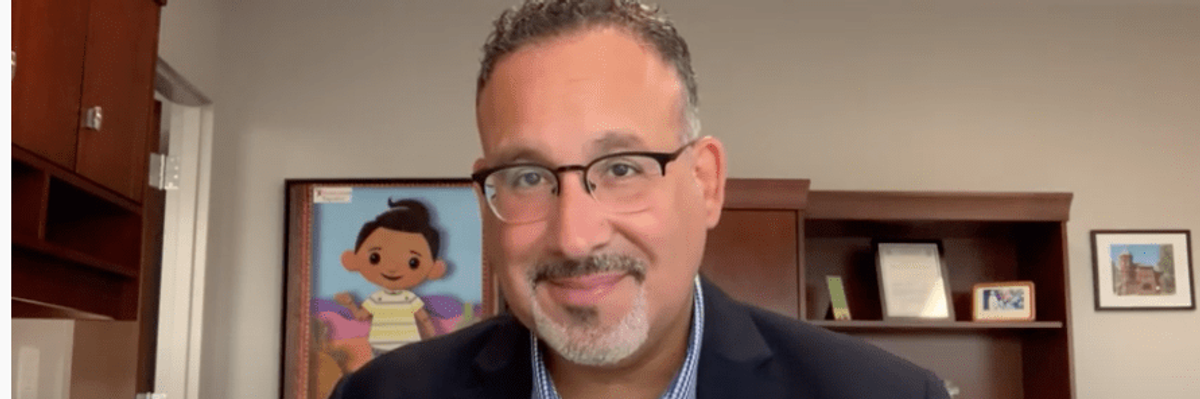

President-elect Joe Biden on Tuesday announced his intention to nominate Miguel Cardona, a former public school principal who is Connecticut's education commissioner, for education secretary. The role is crucial: Cardona would assume the top position at the Education Department as debates swirl about when and how to safely reopen schools and address inequalities aggravated by the Covid-19 pandemic.

Assuming he is confirmed by the Senate and takes office, Cardona will face a number of difficult decisions. But here's an easy one: He should do everything in his power to keep facial recognition technology out of our schools.

Cardona has been outspoken about racial and class inequalities in the education system, and invasive surveillance technology, like facial recognition, supercharges those injustices. A major study from the University of Michigan found that the use of facial recognition in education would lead to "exacerbating racism, normalizing surveillance and eroding privacy, narrowing the definition of the 'acceptable' student, commodifying data and institutionalizing inaccuracy." The report's authors recommended an outright ban on using this technology in schools.

They're not alone. The Boston Teachers Union voted to oppose facial recognition in schools and endorsed a citywide ban on the technology. On Tuesday, New York's governor signed into law a bill that bans public and private schools from using or purchasing facial recognition and other biometric technologies. More than 4,000 parents have signed on to a letter organized by my organization, Fight for the Future, calling for a ban on facial recognition in schools; the letter warns that automated surveillance would speed the school-to-prison pipeline and questions the psychological impact of using untested and intrusive artificial intelligence technology on kids in the classroom.

We've only begun to see the potential harms associated with facial recognition and algorithmic decision-making; deploying these technologies in the classroom amounts to unethical experimentation on children. And while we don't yet know the full long-term impact, the current effects of these technologies are -- or should be -- setting off human rights alarm bells.

Today's facial recognition algorithms exhibit systemic racial and gender bias, making them more likely to misidentify or incorrectly flag people with darker skin, women and anyone who doesn't conform to gender stereotypes. The technology is even less accurate on children. In practice, this would mean Black and brown students and LGBTQ students -- as well as parents, faculty members and staff members who are Black, brown and/or LGBTQ -- could be stopped and harassed by school police because of false matches or marked absent from distance learning by automated attendance systems that fail to recognize their humanity. A transgender college student could be locked out of their dorm by a camera that can't identify them. A student activist group could be tracked and punished for organizing a protest.

Surveillance breeds conformity and obedience, which hurts our kids' ability to learn and be creative. Even if the accuracy of facial recognition algorithms improves, the technology is still fundamentally flawed. Experts have suggested that it is so harmful that the risks far outweigh any potential benefits, comparing it to nuclear weapons or lead paint.

It's no surprise, then, that schools that have dabbled in using facial recognition have faced massive backlash from students and civil rights groups. A student-led campaign last year prompted more than 60 of the most prominent colleges and universities in the U.S. to say they won't use facial recognition on their campuses. In perhaps the starkest turnaround, UCLA reversed its plan to implement facial recognition surveillance on campus, instead instituting a policy that bans it entirely.

But despite the overwhelming backlash and evidence of harm, facial recognition is still creeping into our schools. Surveillance tech vendors have shamelessly exploited the Covid-19 pandemic to promote their ineffective and discriminatory technology, and school officials who are desperate to calm anxious parents and frustrated teachers are increasingly enticed by the promise of technologies that won't actually make schools safer.

An investigation by Wired found that dozens of school districts had purchased temperature monitoring devices that were also equipped with facial recognition. A district in Georgia even purchased thermal imaging cameras from Hikvision, a company that has since been barred from selling its products in the U.S. because of its complicity in human rights violations targeting the Uighur people in China.

Privacy-violating technology has been spreading in districts where students have been learning remotely during the pandemic. Horror stories about monitoring apps that use facial detection, like Proctorio and Honorlock, have gone viral on social media. Students of color taking the bar exam remotely were forced to shine a bright, headache-inducing light into their faces for the entire two-day test, while data about hundreds of thousands of students who used ProctorU leaked this summer.

The use of facial recognition in schools should be banned -- full stop -- and that is a job for legislators. We've seen increasing bipartisan interest in Congress, and several prominent lawmakers have proposed a federal ban on law enforcement use of the tech. But passing legislation will take time; facial recognition companies have already been aggressively pushing their software on schools, and kids are being monitored by this technology right now.

It's only going to get worse.