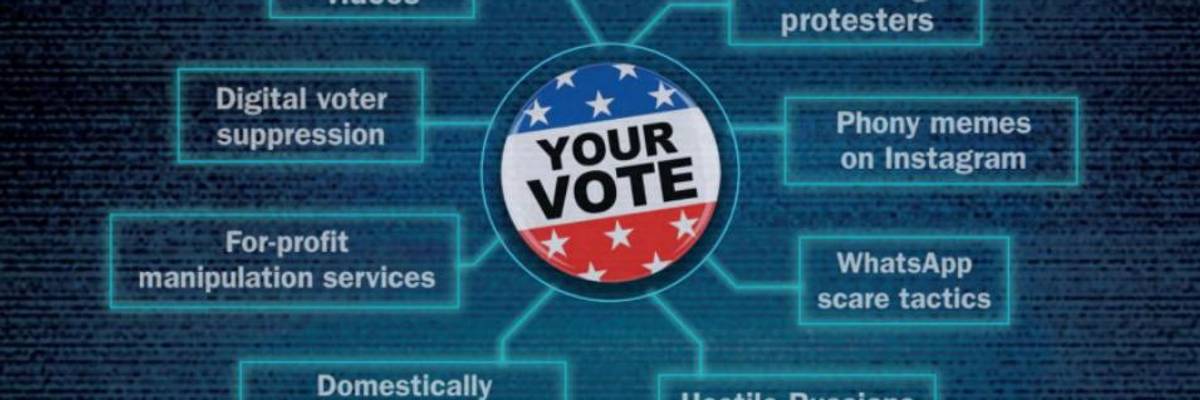

New York University published a report Tuesday entitled Disinformation and the 2020 Election: How the Social Media Industry Should Prepare. (Image: screenshot of report)

A report published Tuesday by New York University warns that fake videos and other misleading or false information could be deployed by domestic and foreign sources in efforts to influence the U.S. 2020 presidential election campaign, and details strategies to combat such disinformation.

"We urge the companies to prioritize false content related to democratic institutions, starting with elections."

--Paul M. Barrett, report author

The report--entitled Disinformation and the 2020 Election: How the Social Media Industry Should Prepare--predicts that for next year's election, so-called "deepfake" videos will be unleashed across the media landscape "to portray candidates saying and doing things they never said or did" and, as a result, "unwitting Americans could be manipulated into participating in real-world rallies and protests."

Deepfakes, as NPR reported Monday, are "computer-created artificial videos or other digital material in which images are combined to create new footage that depicts events that never actually happened." Manipulated videos like those of Democratic House Speaker Nancy Pelosi (Calif.) that spread virally online earlier this year--often called shallowfakes or cheapfakes--also pose a threat to democratic elections, the report says.

In terms of delivery of disinformation, the NYU report spotlights the messaging service WhatsApp and the video-sharing social media network Instagram--which are both owned by Facebook. A report commissioned by the Senate Intelligence Committee in the wake of the 2016 election accused Russia of "taking a page out of the U.S. voter suppression playbook" by using social media platforms including Facebook and Instagram to target African-American audiences to try to influence their opinions on the candidates in that race.

The NYU report predicts that governments such as Russia, China, and Iran may work to disseminate lies in attempts to sway public opinion regarding the next race for the White House, but "domestic disinformation will prove more prevalent than false content from foreign sources." Digital voter suppression, it warns, could "again be one of the main goals of partisan disinformation."

To combat disinformation from all sources, the NYU report offers nine recommendations for major social media companies.

Paul M. Barrett, the report's author and deputy director of the NYU Stern Center for Business and Human Rights, told The Washington Post that social media companies "have to take responsibility for the way their sites are misused."

"We urge the companies to prioritize false content related to democratic institutions, starting with elections," he said. "And we suggest that they retain clearly marked copies of removed material in a publicly accessible, searchable archive, where false content can be studied by scholars and others, but not shared or retweeted."

While the removal of disinformation by social media giants is touted as a positive strategy by Barrett and others, such calls have sparked censorship concerns, especially as online platforms such as Facebook and YouTube have recently blocked content or shut down accounts that spread accurate information.

Michael Posner, director of NYU's Stern Center, said in a statement to The Hill that "taking steps to combat disinformation isn't just the right thing to do, it's in the social media companies' best interests as well." As he put it, "Fighting disinformation ultimately can help restore their damaged brand reputations and slows demands for governmental content regulation, which creates problems relating to free speech."

One example is what critics of the Trump administration have dubbed the 'Censor the Internet' executive order, which would give federal agencies certain powers to decide what internet material is acceptable. After a draft of that order leaked, as Common Dreams reported last month, "free speech and online advocacy groups raised alarm about the troubling and far-reaching implications of the Trump plan if it was put into effect by executive decree."

Additional concerns about the potential consequences of U.S. government actions to combat disinformation were sparked by a Bloomberg report from Saturday, which revealed that "fake news and social media posts are such a threat to U.S. security that the Defense Department is launching a project to repel 'large-scale, automated disinformation attacks'" with custom software.

The NYU report does highlight the potential for legislation--particularly the Honest Ads Act, a bipartisan bill reintroduced earlier this year that aims to improve transparency around who is paying for political ads. However, Barrett told The Hill that he doesn't believe the bill "has much of a chance" of passing because of some Senate Republicans' positions on election security legislation.

"Congress would be making a huge contribution if there were hearings, particularly if there were bipartisan hearings... that educate people as to where we've been and what's likely to come," Barrett added. "We need more digital literacy, and Congress could use its position to provide that."

The NYU report adds to mounting concerns among tech experts, politicians, and voters about how disinformation could sway the 2020 election. John Feffer, director of Foreign Policy In Focus at the Institute for Policy Studies, wrote in June about the potential impact of deepfakes on next year's race:

Forget about October surprises. In this age of rapid dissemination of information, the most effective surprises happen in November, just before Election Day. In 2020, the election will take place on November 3. The video drops on November 2. The damage is done before damage control can even begin.

Feffer added that artificial intelligence (AI) systems which are "designed to root out such deepfake videos can't keep up with the evil geniuses that are employing other AI programs to produce them."

"It's an arms race," he wrote. "And the bad guys are winning."
